Abstract

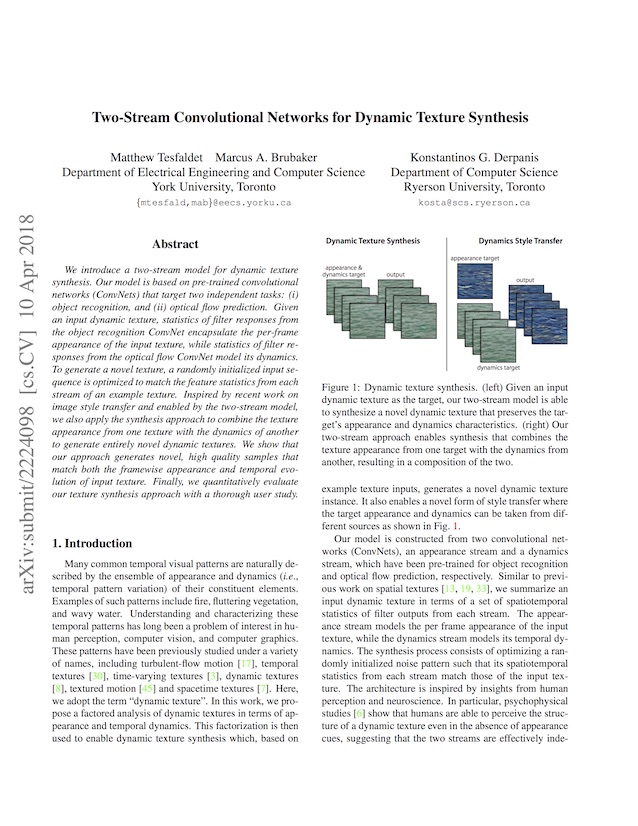

We introduce a two-stream model for dynamic texture synthesis. Our model is based on pre-trained convolutional networks (ConvNets) that target two independent tasks: (i) object recognition, and (ii) optical flow prediction. Given an input dynamic texture, statistics of filter responses from the object recognition ConvNet encapsulate the per-frame appearance of the input texture, while statistics of filter responses from the optical flow ConvNet model its dynamics. To generate a novel texture, a randomly initialized input sequence is optimized to match the feature statistics from each stream of an example texture. Inspired by recent work on image style transfer and enabled by the two-stream model, we also apply the synthesis approach to combine the texture appearance from one texture with the dynamics of another to generate entirely novel dynamic textures. We show that our approach generates novel, high quality samples that match both the framewise appearance and temporal evolution of input texture. Finally, we quantitatively evaluate our texture synthesis approach with a thorough user study.

Material

Dynamic Texture Synthesis

We applied our dynamic texture synthesis process to a wide range of textures which were selected from the DynTex database as well as others collected in-the-wild. Here we provide synthesized results of nearly 60 different textures that encapsulate a range of phenomena, such as flowing water, waves, clouds, fire, rippling flags, waving plants, and schools of fish. Unless otherwise stated, both target and synthesized dynamic textures consist of 12 frames.

The top row consists of the target dynamic textures while the bottom row consists of the synthesized dynamic textures. Scroll horizontally to view more.

Inputs which follow the underlying assumption of a dynamic texture, i.e., the appearance and/or dynamics are more-or-less spatially/temporally homogeneous, allow for better synthesized results. Inputs which do not follow the underlying assumption of a dynamic texture may result in perceptually implausible synthesized dynamic textures.

Click on a thumbnail to play the dynamic texture.

ants

bamboo

birds

boiling_water_1

boiling_water_2

calm_water

calm_water_2

calm_water_3

calm_water_4

calm_water_5

calm_water_6

candle_flame

candy_1

candy_2

coral

cranberries

escalator

fireplace_1

fireplace_2

fish

flag

flag_2

flames

flushing_water

fountain_1

fountain_2

fur

grass_1

grass_2

grass_3

ink

lava

plants

sea_1

sea_2

sea_3

sea_4

shiny_circles

shower_water_1

sky_clouds_1

sky_clouds_2

smoke_1

smoke_2

smoke_3

smoke_plume_1

snake_1

snake_2

snake_3

snake_4

snake_5

tv_static

underwater_vegetation_1

water_1

water_2

water_3

water_4

water_5

waterfall

waterfall_2

Synthesis w/o the Dynamics Stream

To validate that the texture generation of multiple frames would not induce dynamics consistent with the input, we generated frames starting from randomly generated noise but only using the appearance statistics and corresponding loss. As expected, this produced frames that were valid textures but with no coherent dynamics present.

The top row consists of the target dynamic textures while the bottom row consists of the synthesized textures.

fish

Incremental Synthesis

Dynamic textures generated by incremental synthesis. Long sequences can be incrementally generated by separating the sequence into sub- sequences and optimizing them sequentially. This is realized by initializing the first frame of a subsequence as the last frame from the previous subsequence and keeping it fixed throughout the optimization. The remaining frames of the subsequence are initialized randomly and optimized as usual.

The top row consists of the target dynamic textures while the bottom row consists of the synthesized dynamic textures.

calm_water_4

calm_water_5

fish

flag

sky_clouds_1

smoke_1

water_5

Temporally-endless Textures

An interesting extension that we briefly explored are textures where there is no discernible temporal seam between the last and first frames. Played as a loop, these textures appear to be temporally endless. This is trivially achieved by adding an additional loss to the dynamics stream that ties the last frame to the first.

The top row consists of the target dynamic texture while the bottom row consists of the synthesized "infinite" dynamic texture.

smoke_plume_1

Dynamics Style Transfer

The underlying assumption of our model is that the appearance and dynamics of a dynamic texture can be factorized. As such, it should allow for the transfer of the dynamics of one texture onto the appearance of another. This has been explored previously for artistic style transfer with static imagery. We accomplish this with our model by performing the same optimization as usual, but with the target Gram matrices for appearance and dynamics computed from different textures. In effect, the static texture is animated and hence brought to life. To the best of our knowledge, we are the first to demonstrate this form of style transfer.

The top row consists of the appearance target, the second row the dynamics target, and the third row the synthesized result.

fireplace_1_to_fire_paint

flag_2_to_flag_cropped_1

flag_2_to_flag_cropped_2

water_4_to_water_img

Portions of images can be animated.

waterfall_to_waterfall_paint

water_4_to_water_paint

Citation

Mattie Tesfaldet, Marcus A. Brubaker, and Konstantinos G. Derpanis. Two-stream convolutional networks for dynamic texture synthesis. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

Bibtex format:

@inproceedings{tesfaldet2018,

author = {Mattie Tesfaldet and Marcus A. Brubaker and Konstantinos G. Derpanis},

title = {Two-Stream Convolutional Networks for Dynamic Texture Synthesis},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2018}

}